There has been a response to my investigation into Iraq Body Count (IBC) and the people behind it — and longstanding Guardian columnist George Monbiot himself has popped out of the woodwork to give it his official thumbs-up.

The article in question is my INSURGE intelligence piece, “How the Pentagon is hiding the dead,” which critically examines the claims of IBC and IBC-affiliated scholars about the death tolls in Iraq, as well as in other conflicts, mainly Afghanistan and Colombia.

Monbiot, a journalist for whom I have much respect, couldn’t bring himself to say a word in public after news of how my contract was unilaterally terminated by The Guardian for writing on my environment blog about the role of Gaza’s gas in motivating Israel’s military offensives.

But an attack on my critique of Iraq Body Count was enough to break the silence.

My investigation into Iraq Body Count, Monbiot trumpeted on Twitter, is “pernicious bullshit,” which has received a “devastating take-down” by Brian Dean, who runs a blog called ‘News Frames’ and who has apparently been a vocal supporter of Iraq Body Count for many years.

But News Frames’ response fails to address the key issues: that since 2009, IBC directors John Sloboda and Hamit Dardagan et al. have been funded by the very same US and European government agencies complicit in the crimes of the Iraq War, through their parallel work running the ‘Every Casualty’ project; that the IBC directors and researchers are actively using flawed statistical methodologies to underestimate the impact of US wars in Iraq and Afghanistan (and elsewhere); and that their critiques of epidemiological survey methods, including the 2006 Lancet death toll survey, are unsubstantiated.

Frame-up

Brian Dean’s approach, according to his blog, is to focus on the way the media unconsciously “frames” things using metaphor and narratives to shape our thinking. He purports to draw on the cognitive-linguistic work of “George Lakoff, etc.”

The “framing” of his own ‘refutation’ of my story is, in itself, revealing of Dean’s modus operandi. He begins by framing the story as an “ugly smear piece,” containing “conspiratorially-framed claims,” relying on “misleading rhetoric.”

Given his obsession with “framing”, the type of language that Brian Dean himself uses to “frame” my work is revealing. He uses the word “smear” 21 times, “rhetoric” 16 times, “misleading” 14 times, “false” 15 times, and so on.

He also “frames” his piece by repeatedly and selectively quoting my negative descriptions of IBC and IBC-related work, entirely out of context and grouped together in blocks, to give the impression that my article is chock full of “misleading rhetoric.”

Below, I go through Brian Dean’s defence of IBC step-by-step. For ease of reference, I’ve inserted his text in bold, before my own responses.

One of Ahmed’s sources, a statistics professor who writes for the Washington Post, has since complained that he was selectively quoted and misrepresented by Ahmed’s article. Another source has complained of exaggerations and errors, suggesting that Ahmed was engaged in “personal attacks” on IBC and its researchers. Ahmed has now written a second piece which responds to these criticisms.

I responded to both those issues here. Dean admits that he read this response, yet omits to mention what I clarified in that response, that I immediately amended and updated my original article.

In fact, Dean selectively misrepresents both these sources, so as to ignore that they recognized my article raised valid questions.

The first source, statistics professor Andrew Gelman, conceded that my analysis and critique may well be perfectly correct:

“Ahmed may be correct that the Burhnam study was performed well and that Spagat’s criticisms are baseless and that my acceptance of Spagat’s criticisms were misinformed — as I wrote above, I know nothing about Iraq. If Ahmed wants to say this, and to paint me as out of touch in my ivory tower, fine… Ahmed’s article discusses how some of the work of Spagat and colleagues was funded by a US government organization with connections to the Defense Department. It’s legitimate to point this out, and in this spirit I will also say that I’ve received National Security Agency funding for some of my research.”

The second source, conflict statistician Dr Patrick Ball, praised my article and expressed gratitude that it addressed the issues:

“We welcome Dr Ahmed’s summary of various points of scientific debate about mortality due to violence, specifically in Iraq and Colombia. We think these are very important questions for the analysis of data about violent conflict, and indeed, about data analysis more generally. We appreciate his exploration of the technical nuances of this difficult field.”

Ahmed’s misleading assertions & falsehoods

Ahmed repeatedly conflates IBC and its output with “IBC-affiliated” persons and projects, leading to false claims about IBC’s funding, misleading assertions about “fraud” and nonsensical inferences which read more like conspiracy theory than logic. Remarkably, he asserts that “all” of IBC’s publications breach ethical standards by not disclosing certain funding:

“The breach is committed with such systematic impunity throughout their academic publication record that it does, indeed, raise serious and fundamental and perfectly legitimate questions about the integrity of their research.”

Here, Dean simply fails to understand the point. Or perhaps wants to misunderstand the point. Dean doesn’t want me to talk about funding received by “IBC-affiliated” persons and projects. They are only “IBC-affiliated,” and therefore such funding raises no issues about IBC.

Unfortunately for Brian Dean and IBC, research funding doesn’t work like that, as I already explained in my previous response to Gelman and HRDAG.

It is a fact that the IBC’s directors have received funding from various Western foreign policy agencies. Dean wants us to believe that this is irrelevant for understanding IBC.

It’s not. It’s highly relevant for understanding the sorts of interests that think that IBC’s work is useful. In this case, many of the same interests supportive of the Iraq War have funded, and are still funding, the death toll related research of senior IBC directors.

Dean also simply misrepresents my argument. I do not say that “all” of IBC’s publications breach ethical standards. Indeed, I’m not talking about work published by IBC. I am, however, talking about the academic publications authored by various IBC researchers and directors. This is clear from the wider context of what I actually said, which Dean quotes so selectively:

“The failure of IBC authors including Spagat to fully and clearly disclose their institutional affiliations and research funding sources connected to US and European government foreign policy agencies and departments, is an elementary, but fundamental, breach of ethics. The breach is committed with such systematic impunity throughout their academic publication record that it does, indeed, raise serious and fundamental and perfectly legitimate questions about the integrity of their research.”

What IBC itself has published in the form of reports and dossiers is not what I mean by an “academic publication”.

I am referring to the various papers published in scientific and academic journals, where the authors of those papers, which I describe as “IBC authors” due to institutional affiliation with IBC, are in receipt of funding from various Western foreign policy agencies.

Interestingly, Dean is not in the slightest bit interested in the lack of disclosure in any of these papers. IBC authors like Spagat, Sloboda, Dardagan, and so on, were linked to projects that received funding from US, Swiss, German and Norwegian government foreign affairs agencies, during the periods in which many of their academic articles were published. Yet they chose not to disclose those funding sources, despite the risk of a conflict of interest due to the nature of the funding received. The failure to disclose these sources of research funding in itself raises serious questions.

The funding (from sources “connected to US and European government foreign policy agencies and departments”) which Ahmed believes should have been “disclosed” by IBC and/or its publications was not, in fact, received by IBC or any of its publications.

My story does not claim that the IBC as an institution received funding from US and European governments. It shows conclusively, however, that such funding was received by IBC directors through related organizations and research programmes they were involved in, namely the Oxford Research Group’s casualty recording project, and the Every Casualty project that came out of it.

IBC’s funding is listed on its website. The “IBC”(-related) funding which Ahmed refers to has been openly disclosed by the publications/organisations which it funded — all non-IBC.

My article did not even touch upon the subject of IBC’s own institutional funding.

What Dean calls “The ‘IBC’(-related) funding”, meaning the funding received by IBC directors from other projects/programmes that were nonetheless closely related to the same subject, has not been disclosed in the academic publications of IBC-affiliated authors.

Dean simply ignores this. He obviously doesn’t care, and ardently wants us not to care either.

For example, he claims — falsely, as it turns out — that IBC’s work is influenced by funding from the United States Institute of Peace (USIP), and builds a picture of USIP as a “neocon front agency” whose “entire purpose is to function as a research arm of the executive branch and intelligence community”. He concludes, as a result of such claims, that IBC “is deeply embedded in the Western foreign policy establishment”.

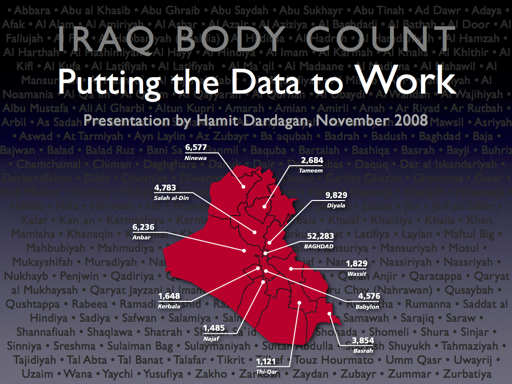

From 2009 until today, IBC directors John Sloboda and Hamit Dardagan have run casualty counting projects which have received core funding from USIP. That is a period of six years.

Brian Dean uses his sleight-of-hand artificial distinction between IBC’s directors, and IBC as a corporate entity, to pretend that whatever funding Sloboda and Dardagan received is irrelevant to the IBC’s work.

Dean fails to understand that the external interests of directors of a nonprofit company may well influence that company. IBC’s work is overseen by the directors of its company, Conflict Casualties Monitor, which was incorporated in 2008. Those directors have received a significant amount of support and funding from USIP since 2009 through their work in the Oxford Research Group and later the Every Casualty project.

But Dean wants to have his cake and eat it. He wants us to have faith that USIP had no influence on IBC, despite having funded IBC’s directors in separate but closely related casualty counting projects for the last six years.

Such faith, however, is far from justified, as we will see below.

But Ahmed doesn’t mention that USIP has funded and repeatedly collaborated with Gilbert Burnham, lead author of the 2006 Lancet Iraq study. Presumably his investigative journalism didn’t stretch that far. Most of the shocking inferences which Ahmed draws from “connections” with agencies such as USIP would also apply to Burnham, as we’ll see. (USIP has also funded other researchers used by Ahmed as authoritative sources — eg Professor Amelia Hoover Green).

Predictably, Dean ignores my discussion of this non-issue in my first response piece, which he admits to having read, but strangely can’t muster the gumption to address in his ‘refutation.’ I wrote:

“USIP’s deep-seated structural ideological biases are in fact widely understood in the peace research community, and have been demonstrated in the peer-reviewed scientific literature… Prof. Sreeram Chaulia, for instance, in his study in the International Journal of Peace Studies published via the School for Conflict Analysis and Resolution at George Mason University, finds that USIP ‘has been consistently used by US foreign policy elites as an instrument to counter the peace movement.’

“Research funded by USIP, Chaulia finds, functions: ‘… by projecting the US as a benevolent hegemon that unswervingly pursues peace in various conflict zones… USIP is an ideational weapon that subjugates the knowledge of the American peace movement on behalf of the state… by validating certain ideological strands of peace research as ‘objective’ while disqualifying others as biased or ‘unscientific.’’

“The goals of foreign policy departments are complex and do not preclude sponsorship of beneficial or critical projects, as long as they still fit the above ideological framework and goals…”

I also noted the most critical fact of all — that my Insurge piece had documented the specific biases of USIP in relation to the Iraq War:

“While USIP funding on issues unrelated to Iraq may well be much less politicized, this is demonstrably not the case on Iraq. As I document in my Insurge investigation, USIP was itself complicit in the illegal military occupation of Iraq, having established an office in Baghdad with Bush administration funding, through which senior USIP officers and representatives worked closely with the Coalition Provisional Authority, US military officials, and the US-installed Iraqi administration.

“That USIP has funded positive scientific peace research in areas unrelated to Iraq has no bearing on the fact that USIP’s entire relationship to Iraq is deeply and directly embedded in official US government goals — the idea, therefore, that USIP can be seen as a neutral research funder on Iraq is preposterous.”

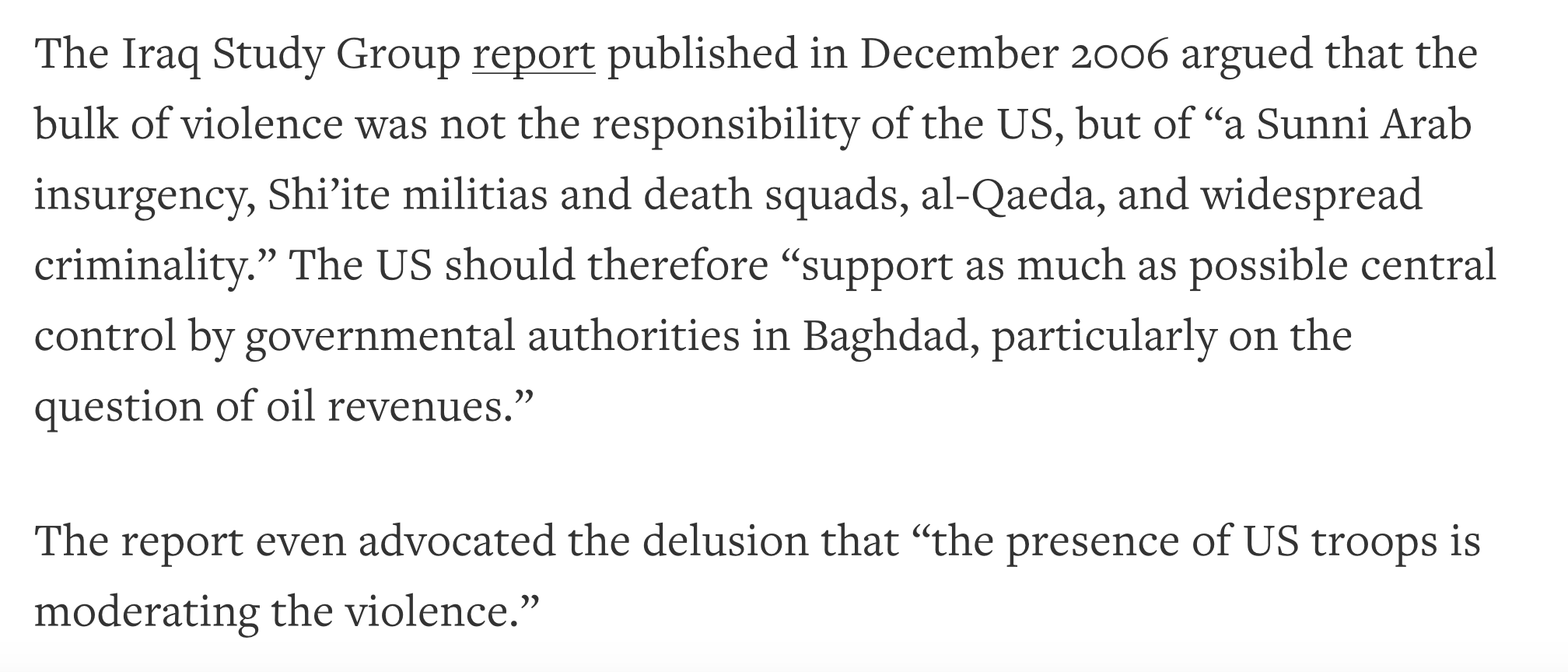

Dean also ignores the fact that USIP’s direct complicity in the military occupation of Iraq, which must of course raise legitimate questions about USIP’s capacity to be an objective grant-funder on the Iraq issue, went hand in hand with a demonstrated bias in assessing the Iraq War death toll. As I also wrote:

“… the very specific evidence I report from the USIP-convened Iraq Study Group project on the question of casualty counting, demonstrating that USIP’s priorities undermined and whitewashed the task of assessing US forces’ role in violence.

“This is clear empirical evidence of USIP’s ideological bias on the issue of casualty counting and responsibility for violence in Iraq. For USIP, attacks on US soldiers were being insufficiently counted, US violence in Iraq against Iraqi citizens did not meaningfully exist — and more focus needed to be placed on sectarian violence in Iraq as a cause of violent deaths.”

Here are some screenshots from my original Insurge piece where the evidence of this is discussed in relation to the Iraq Study Group, which was convened by USIP in 2006, and whose report was published by USIP late that year:

And also:

Dean doesn’t even acknowledge this. I suppose this means we can assume he’s quite happy, then, for IBC to be associated, through its directors, with a US government backed agency ideologically predisposed to sanitize US violence in Iraq.

Nothing to see here.

Conflict of interest? What conflict of interest?

Another example of Ahmed’s selective treatment of the “evidence” is provided when he cites the 2013 UCIMS epidemiological study (published in PLOS Medicine) as “corroboration” of the 2006 Lancet Iraq survey, but fails to mention that its estimate for violent deaths is far closer to (ie approximately matches) IBC’s figure. This fact alone tends to undermine the central premise of Ahmed’s article, since if IBC’s role is to “whitewash war-crimes” (Ahmed’s words), why would its violent-causes body count be in approximate agreement with the PLOS study (which was co-authored by Gilbert Burnham and vaunted as a methodological improvement on Burnham’s 2006 Lancet study)?

Once again, Dean ignores the following argument in my original Insurge piece:

“The new 2013 PLOS Medicine survey corroborated the 2006 Lancet survey’s higher estimates, concluding that 461,000 excess deaths had occurred in Iraq from 2003 to 2011, two-thirds of which could be attributed to violence. The PLOS authors clarified that they believe this was a conservative underestimate

“A co-author of that study, Prof. Tim Takaro of Simon Fraser University’s Faculty of Health Sciences, was also a co-author of the new PSR report, which argued that the most accurate figure was probably between the 2006 Lancet’s estimate (for 2003–2006) and the 2013 PLOS estimate (for 2003–2011). The Lancet estimate, extrapolated up to 2015, imply nearly 1.5 million violent deaths.

“Takaro et. al thus concluded that the true death toll from armed violence would lie somewhere between that higher end, and the more conservative PLOS findings. Incorporating excess deaths from indirect impacts of the war back into this estimate would lead to an overall estimate of just over a million Iraqi deaths, which the PSR authors stress itself remains a conservative figure.”

So a co-author of the 2013 PLOS study argues that its death toll findings corroborate the Lancet study, and criticizes the IBC’s death toll estimate. But never mind, let’s just believe Brian Dean, the news frames expert.

As for my reference to whitewashing war-crimes, this is a specific reference to the casualty metric created for NATO using the IBC database, by IBC-affiliated authors including its directors, which according to the Human Rights Data & Analysis Group (HRDAG) is likely to systematically overlook ‘dirty war’ incidents against civilians. Dean fails to show that HRDAG’s analysis of the metric created by IBC’s directors and researchers is incorrect. More on that in a bit, too.

“Tapestry of connections” (aka conspiracy)

Ahmed attempts to implicate IBC and “IBC affiliated researchers” in what he calls a “tapestry of connections”. And he certainly weaves a remarkable conspiracy — his cast of characters includes “Senator Hugh Burns’ Fact Finding Committee on Un-American Activities”, “The Pentagon’s counterinsurgency geographer”, “Colombian paramilitary groups involved in drug-trafficking”, “Colombia’s state-run Central Bank”, “the Nazis” and “Nazi scientists” (among others). I struggled to see how there’s any connection between these and IBC, and, to be fair to Ahmed, he doesn’t imply there’s any real link with the Nazis. He simply mentions how the Nazis achieved success in the “abuse of science to legitimize war and sanitize death” — no doubt to give us some “precedent” for IBC’s work.

Most of this stuff is spun by Ahmed from “connections” to research by Professor Michael Spagat which has nothing to do with IBC (other than that Spagat has been an advisor to IBC). Ahmed attempts to (falsely) implicate IBC in these “connections”. For example:

“Spagat’s early career connections to IREX and NCEEER, both conduits for US State Department propaganda operations, as well as to Radiance Technology, USAID, and USIP, raise serious ethical questions, as well as questions about the reliability and impartiality of his work, and that of IBC.”

Dean perhaps has some trouble understanding English. The paragraph he cites here is about Michael Spagat, who has been associated with the IBC, and with other projects run by IBC directors relating to casualty counting, as an adviser, consultant and researcher. Spagat also authored the main critique of the 2006 Lancet Iraq death toll survey that I analyse at the end of my story.

Dean fails to understand that I’m not drawing conclusions solely from Spagat’s early career funding via IREX and NCEEER, grant-making institutions which are closely linked to the US State Department. I’m drawing conclusions from the fact that Spagat has been, throughout his entire career, in receipt of funding from sources linked to the US government and/or agencies with close links to the US government, all the way up to USIP. It is therefore his association with IBC that raises questions about IBC’s reliability and impartiality.

I am not suggesting that the funding received by Spagat in 1994 to 1996 from IREX and NCEEER had anything to do with IBC per se. Nevertheless, Dean goes on to write this:

Despite the obvious irrelevance of this to IBC, Ahmed asserts that it raises “questions about the reliability and impartiality” of IBC’s work.

Um, no I don’t, but if you quote repeatedly and entirely out of context, even English language night school won’t help you.

Ahmed goes into some detail on IREX and NCEEER, presumably to convince us that they are “intimately related to US government propaganda operations”. For example, NCEEER’s Board of Directors includes one Richard Combs, and, “from 1950 Combs was chief investigator, counsel and senior analyst for Senator Hugh Burns’ Fact Finding Committee on Un-American Activities”. Ahmed continues:

It’s interesting that Dean quotes paragraphs toward the end of my very lengthy original article, which are not even central to my findings, to “frame” his ‘refutation.’

The material on IREX and NCEEER, which Dean quotes small snippets of, provides well-documented information that contextualizes Spagat’s early career affiliations to institutions which played a key role in promoting State Department goals in Russia, namely, the transition to a capitalist market economy.

This type of tenuous guilt-by-association “logic” runs throughout Ahmed’s article. It’s the “logic” of smear. With similar rhetoric one could just as easily “implicate” the “heroes” of Ahmed’s piece. Gilbert Burnham, for example, has received research funding from the World Bank, the Afghanistan government, USAID (another agency which Ahmed depicts as nefarious), Procter & Gamble, etc — in addition to his collaborations with “neocon front agency” USIP, mentioned above (including on Iraq and Afghanistan). Imagine the “connections” here. What does all this say about “the reliability and impartiality” of Burnham’s work, including the 2006 Lancet Iraq survey? What does it say about the people he’s “affiliated” with?

Of course, it says very little by itself. It says even less when seen against Burnham’s work in its entirety. But that wouldn’t stop someone writing a rhetorical smear piece on Burnham by expanding on all the “suggestive”, “suspicious” connections in a misleading way — as Ahmed has done with IBC.

Once again, Dean misunderstands the simplicity of my argument. Burnham and others have received all sorts of funding from various government and other institutional bodies. What Dean fails to grasp is the relationship between funding received, and research being pursued.

I have made a very specific argument that Dean simply fails to address:

- IBC’s directors, since 2009, have received funding from a range of US and European foreign policy agencies, starting with USIP, for casualty counting related work.

- USIP in particular has a history of subservience to neoconservative ideology — a matter documented in several histories. I quote an example of a study on USIP’s subtle agenda of co-opting the peace movement from the peer-reviewed social science literature.

- USIP in particular has a history of deep partiality in relation to Iraq, was complicit in the occupation of Iraq, and convened the Iraq Study Group, which specifically demonstrated a bias on the issue of causes of violent deaths in Iraq that absolved US forces of culpability.

- Therefore, the receipt of USIP funding by IBC’s directors for casualty counting work from 2009 until today flies in the face of the IBC’s claims to be an independent anti-war body. How can IBC as a corporate entity be ‘independent’ when its company directors are also directors of nonprofit companies in receipt of core funding from Western foreign policy agencies for casualty counting work? Incidentally, as I noted in my original Insurge piece, John Sloboda himself in a speech before USIP disavowed his own anti-war credentials by insisting that he and IBC were only opposed to the Iraq War, but not necessarily any other war.

This, naturally, prompts the following question: How is the above similar to the funding received by Burnham? As far as I’m aware, Burnham has declared his sources of funding for his research in publications where appropriate. The IBC directors have not declared the government funding received by them in any of the scholarly papers published in scientific journals from 2009 until today.

Also, Burnham does not run an organization related to his research, which receives funding from a US government agency deeply involved in sanitizing the role of US forces in violence during the Iraq War.

Dean thinks I am trying to “smear” through guilt-by-association. That is not the case. This is not about guilt-by-association, nor about “villains” and “heroes” — Brian Dean’s framing, not mine.

It’s about being funded by the very US government foreign policy agency with a vested interest in downplaying its own role in devastating Iraq. It’s about using a sloppy methodology based on the IBC database to develop a death measuring metric that is being used by NATO to potentially undercount ‘dirty war’ incidents (i.e. war crimes) in conflict arenas like Afghanistan, as I will demonstrate below with reference to direct evidence already cited copiously that Dean pretends doesn’t exist.

The fact that Dean is incapable of recognizing or acknowledging any merit at all in these criticisms — unlike Gelman and HRDAG — illustrates what agenda is at work here.

Dean will not accept any criticism of IBC. IBC is basically God. Everyone who critiques IBC is engaged in ugly smearing and misleading rhetoric. Dean’s imaginary “hero” versus “villain” narrative is a frame of his own construction, not mine. IBC is the hero, and I’m the villain, hell-bent on attacking poor innocent IBC. And thankfully, Sir George Monbiot has waded in on Twitter to valiantly come to the aid of his friends.

One of the “connections” that Ahmed’s website detective work reveals regarding “IBC-affiliated” Professor Michael Spagat is the following:

In the acknowledgements to his 2010 critique of the Lancet study in Defence and Peace Economics, Spagat thanks Colin Kahl, indicating that a senior member of Obama’s Pentagon reviewed his manuscript before submission and publication.

Dean’s selectivity in his choice of quotes remains as fascinating as ever. He homes in on my mention of Obama’s Pentagon official Colin Kahl, but omits to mention that I show specifically that Colin Kahl was himself a proponent of lower death toll estimates of the Iraq War, and even went so far as to praise the US military’s conduct in Iraq as exemplary compared to previous wars.

So it’s not just that Kahl was a senior Pentagon official — it’s that he was a senior Pentagon official with a demonstrated interest in sanitizing the impact of the Iraq War on civilian life by minimizing the death toll.

Additionally, my critique of the conflicts of interest involved in the Defence and Peace Economics paper by Spagat is not simply about one person, but about several reviewers/editors for the journal, and about the journal itself.

As I document in the piece:

“The editor-in-chief responsible for receiving and publishing Michael Spagat’s paper on The Lancet Iraq mortality survey was Prof. Daniel Arce from the University of Texas, Dallas. He had recently received a grant, along with another journal editor Todd Sandler, from the US Department of Homeland Security for a project on ‘Terrorist Spectaculars: Backlash Attacks and the Focus of Intelligence.’…

“The journal’s founding editor and special editorial advisor is Prof. Keith Hartley of York University, who is also a longtime British Ministry of Defence (MoD) consultant. From 1985 to 2001, Hartley was special advisor to the House of Commons Defence Committee. He currently chairs the finance group of the UK Department of Trade and Industry’s Aerospace Innovation and Growth Team.

“During the period after the 2003 Iraq War, Hartley was on the Project Board of the Defence Analytical Services Agency’s (DASA) Quality Review of Defence Finance & Economic Statistics. DASA provides statistical services to the MoD, and its chief executive is also MoD’s head of statistics….

“Career MoD consultant Prof. Hartley believed strongly that the costs of the Iraq War outweighed its benefits. But he was also biased toward a heavily reduced casualty figure derived from the Iraq Body Count…

“In 2005 presentation slides on the costs of the war, Hartley cited only two estimates of the Iraqi civilian death toll: 12,800–14,000 and 10,000–33,000 civilians. The last figure was published in the Journal of the Royal United Services Institute (RUSI), a Whitehall think-tank that operates in close alignment with MoD policy.

“But Hartley’s first figure was reported by IBC as of 22nd September 2004. Hartley even rounded down IBC’s figure of 14,843 recorded deaths to 14,000.

“Hartley selected these low figures for Iraqi deaths despite the fact that The Lancet had the preceding year published the first major peer-reviewed epidemiological study estimating the total ‘excess death’ toll from the war, conservatively, at around 100,000 people.”

I also discuss in some detail the ideological outlook of the journal, which is fundamentally supportive of US hegemony and the essential benevolence of US foreign policy goals.

It’s a pity that Ahmed didn’t apply the same “investigative” technique to the UCIMS/PLOS Iraq study (the one that was co-authored by Gilbert Burnham and vaunted as an improved update to the 2006 Lancet study). He would have found that a certain Skip Burkle “reviewed the manuscript” of that study “before submission and publication”. Burkle was appointed by the Bush administration as Interim Minister of Health in Iraq in 2003. (Of course, one could probably also find things in Burkle’s favour, but why bother if the main purpose is to list a series of “connections” to warmongers).

As usual with Brian Dean, his comparisons are wide of the mark. I identified Colin Kahl as a senior active Pentagon official with a demonstrated bias on the issue of the Iraq War death toll, and the violence wrought by US forces in Iraq.

Dean’s tit-for-tat response, like an insolent child rather than a person engaging in meaningful debate, counters with the following: Burnham’s study was “reviewed” by Skip Burkle, who was Interim Minister of Health in Iraq in 2003.

But Dean conveniently omits to mention that, unlike Kahl, who was appointed personally by President Obama, Burkle had his appointment cleared under Jay Garner’s tutelage, who was a senior administrator at USAID. Dick Cheney and other Bush administration officials did not really know who Burkle was. After just one week in his job as interim health minister, Burkle received an email from a USAID official saying the Bush White House wanted a “loyalist” in the job. He was promptly replaced by Bush “loyalist” James Haveman (whose health policies for Iraq were disastrous, by the way).

Notably, as Jonathan Steele and Suzanne Goldenberg reported in The Guardian some years ago, Burkle — a leading Harvard public health expert himself — believed that The Lancet’s 2006 death toll estimate was very conservative:

“One expert also believes the number of civilian casualties may be higher than the Baltimore/Lancet figure. Frederick ‘Skip’ Burkle is a professor in the department of public health and epidemiology at Harvard University who ran Iraq’s ministry of health after the war but was sacked by the US and replaced by a Bush loyalist. He says the survey ignored the occupation’s indirect or secondary casualties — deaths caused by the destruction of health services, unemployment and lack of electricity. Two surveys by non-government organisations found a rise in infant mortality and malnutrition, he notes, so why are those figures not reflected in the second study that appeared in the Lancet?”

Continuing with the “investigation” into a “tapestry of connections”, what could we say about Gilbert Burnham’s Afghanistan study, whose “assessments were funded by the Ministry of Public Health through grants from the World Bank”. A ZNet article from 2009 made an interesting observation about this study:

A case in point is Afghanistan, where the war dead are measured “only” in the thousands, and where the “excess deaths” calculation can be interpreted as favouring the NATO invasion, if numbers are taken to be the sole criterion. For example, a Johns Hopkins University study (run by Gilbert Burnham, co-author of the 2006 Lancet Iraq survey) found lower infant and child mortality rates, due to improved medical care, following the invasion. The implication here is that the number of lives saved exceeds both tallied and estimated death tolls from the fighting.

I see nothing wrong with asking such questions about Burnham’s Afghanistan research, of which I know little. My story was an investigation into IBC’s methods, and whether the criticisms of the 2006 Lancet death toll survey by IBC-affiliated researchers were valid. I found that the IBC’s methods are deeply compromised and flawed — according to leading statistical experts — and that criticisms of the Lancet study from people affiliated to IBC were largely unfounded.

Dean can’t seem to get his head round the idea that this isn’t a personality battle. I note that Burnham’s Afghanistan study does not appear to have been published in a scientific journal. This doesn’t mean it is incorrect, but does mean it didn’t go through a formal peer-review process.

And certainly, funding from the Afghan Ministry of Health — which as Prof Marc Herold has shown (and I cite him copiously in my Insurge critique of IBC) has a fairly dismal record itself — raises perfectly legitimate questions about the integrity of the research.

The problem is that Dean is either lying, or plain dumb. Burnham’s Afghanistan study was not about mortality rates as such in Afghanistan.

But to prove otherwise, Dean quotes not the study, but a “ZNet article from 2009”. That article is by Robert Shone, another longstanding IBC supporter, who claimed via ZNet that Burnham’s Afghanistan study “found lower infant and child mortality rates, due to improved medical care, following the invasion.”

But Shone doesn’t appear to have even read Burnham’s paper. Instead, he offers the following footnote: “A National Journal article claimed that Burnham’s Afghanistan study shows that an ‘estimated 89,000 infants per year’ are saved by medical improvements, and that this figure ‘far exceeds the estimates of people reported dead in the fighting between the government and the Taliban’.”

Yes, this is the very same National Journal article that I critique in my original piece, and which makes a number of false claims and unsubstantiated insinuations against the 2006 Lancet Iraq death toll study.

I had a look at the actual Burnham Afghanistan study. It is not a study of mortality rates in Afghanistan. It is not a study of the Afghan death toll due to the war. It is a study to measure the performance of Afghanistan’s health services, using a tool called the ‘Balanced Scorecard.’

The study found that from 2004 to 2006, there were major improvements in health service delivery. The study does not anywhere imply that these findings mean that NATO did not kill Afghans during the war. It does not even in any sense compare estimates of lives saved due to improved access to healthcare with estimates of people who reportedly died due to the direct and indirect impacts of war. It also does not anywhere suggest that improved medical care has reduced the number of casualties from NATO’s war in Afghanistan.

Shone is simply bullshitting, and Dean is swallowing it like a kid in a candy store.

So too, it seems, is George Monbiot.

So, if we used a distorting, exaggerating, overheated rhetorical style, we would say that Burnham’s study (funded by the World Bank and a US puppet-government) “whitewashed” the crimes of US/NATO occupiers in Afghanistan by hawking the “life-saving” (in “excess deaths” terms) and “health-improving” benefits and “improvements” of bloody military intervention imposed by imperialist Western foreign policy.

I think the grouping together of the words “distorting, exaggerating, overheated rhetorical style” to describe my article is kind of like the pot calling the kettle black, no?

This is doubly ironic considering Burnham’s — and Ahmed’s — critical comments on so-called “passive surveillance” (a common misnomer for the journalistic “surveillance” used by IBC-type projects). This approach to casualty recording (eg as utilised by Professor Marc Herold) more or less showed the bloody mass slaughter in Afghanistan for what it was, in contrast to the epidemiological “excess deaths” whitewashing calculation, which “scientifically demonstrated” — with statistical “number-crunching” — some spurious “net benefit” of war crime.

If Burnham’s Afghanistan study is wrong, I don’t think this disproves the entire science of epidemiology.

It is not at all clear, however, that Burnham’s Afghanistan study is wrong.

More to the point, Burnham’s Afghanistan study was basically an application of a performance measurement tool to assess basic health service delivery in Afghanistan. It did not attempt to study the Afghan death toll due to the war. It did find significant improvements to certain elements of the healthcare system. Whether or not the study’s findings were accurate, neither Dean nor his source, Shone, proves anything about the study (which they have clearly not even tried to read, let alone understand); and their claim that the study assessed the number of Afghans who had died due to the war would be sort of funny if it weren’t so horrifyingly pathetic.

It is also ironic that Dean decides to cite Marc W. Herold, the economics professor who has used passive surveillance techniques to document Afghan deaths due to war. As readers of my original piece will be aware, while IBC-affiliated authors Spagat and Hicks were publishing pseudo-scientific papers that created a metric subsequently used by NATO to claim that a change in military strategy had led to a marked decline in deaths of Afghan civilians, Marc Herold was arguing that deaths of Afghans had actually increased.

But who cares? According to Dean, this work had nothing to do with IBC even though it was performed by two IBC scholars, one of whom was then an IBC director.

Continuing with the Ahmed-style rhetoric, we could add the fact that Skip Burkle (named Interim Minister of Health in Iraq in 2003 by Donald Rumsfeld and Dick Cheney, as mentioned above) is a longtime associate of Burnham (whose Afghanistan research has been repeatedly funded by the Afghanistan Ministry of Public Health) and that Burnham has collaborated with USIP (the “neocon front agency”) on its 2007 “Rebuilding a Nation’s Health in Afghanistan” symposium, and also that the Johns Hopkins Bloomberg School of Public Health (Burnham’s school) has continually collaborated with USIP on “conflict research” (including Afghanistan and Iraq) — and you have a “tapestry of connections” (or something) which certainly looks “deeply embedded in the Western foreign policy establishment”.

Or, rather, you don’t. What you have is a relatively innocuous bunch of facts which can probably be woven into something by someone who cherry-picks and embroiders with rhetoric — with the purpose of discrediting the target of that rhetoric.

So yes, let’s compare, shall we? Re-read above: Burnham, whose Afghanistan study basically says very little about the Afghan death toll due to the war; Burkle, interim health minister, who was sacked by the Bush administration after one week for not being “loyalist” enough; and Burnham again, who has received some USIP funding, as have many other conflict experts doing laudable work, although not for the Lancet studies.

Let’s compare that to the network around the IBC. Here you have John Sloboda and Hamit Dardagan as founding directors of the IBC, and since 2008 as directors of a nonprofit company, Conflict Casualties Monitor, through which they run IBC. In the same period, Sloboda and Dardagan organized a casualty counting project at the Oxford Research Group, which eventually spawned its own nonprofit company, Every Casualty, both of which have received core funding from USIP since 2009, under Sloboda’s watch. Every Casualty has also received funding from the Swiss foreign affairs ministry, the Norwegian foreign affairs ministry and the German foreign affairs ministry.

Apart from its neoconservative leanings, USIP more disturbingly has a demonstrated bias on the politics of the Iraq War, was directly complicit in the occupation of Iraq, and has shown a specific bias toward understating the violence committed by US forces against Iraqis.

We also have, in this same period, Sloboda and Dardagan working closely at IBC, ORG and Every Casualty with IBC director Madelyn Hicks and IBC advisor Mike Spagat. All of them have been co-authors of scientific papers in which they utilize the IBC database to make a range of claims that have been criticized by world-renowned statistical experts like HRDAG for being fundamentally unscientific.

Some of this work has involved creating a casualty estimation metric used by NATO, which HRDAG criticizes for its potential to obscure and minimize ‘dirty war’ incidents (hence my phrase, which really pisses off Brian Dean, “whitewashing war crimes”). HRDAG also criticizes IBC’s methodology more generally for being inherently biased toward overlooking certain types of deaths.

False claims about IBC’s funding

Careless readers of Ahmed’s rhetoric might come to the conclusion, as someone posting to Twitter did, that IBC has “dodgy imperialist military funding sources”…. These claims are false. Ahmed does not demonstrate that IBC (or “IBC’s output”) is “financed by” or “performed under the financial and organizational influence of” these interests. Instead, he documents some funding of projects undertaken by the Oxford Research Group (ORG) and others “affiliated” to IBC.

I didn’t make claims about IBC’s funding. I made claims, documented with evidence, about the funding received by all the key people associated with the IBC at a senior level, organizationally as directors, and academically in terms of being involved in academic publications in journals by IBC-linked authors.

Dean powerfully defeats the straw man of a Twitter post where someone said that IBC has “dodgy imperialist military funding sources” — something I never claimed. But by using this to “frame” his missive, he misleads his readers by mischaracterizing my story.

Ultimately, he fails to address the substantive issue, which is that IBC directors organized and received such funding from US and European government foreign affairs agencies for parallel casualty recording work. That is a conflict of interest.

Clearly, Dean is quite comfortable with that.

He has every right to be. I think that says more about him than it does about me. And perhaps, it says something about Monbiot, too, since he gives Dean such a resounding endorsement from his respected position as a longtime Guardian columnist.

These examples are as close as Ahmed gets to supporting his claims of “financial influence” on IBC — and they are not very close. Let’s look at each instance, starting with the most recent. Every Casualty was initially an ORG project (initiated in 2007) and then became an independent charitable organisation from October 2014, with co-directors Dardagan and Sloboda. Its work is separate — and separately-funded — from IBC, although, as Ahmed notes, IBC is listed as a member of its ‘International Practitioner Network’ (or ‘Casualty Recorders Network’ as it’s now named).

The financial and organizational influence of the Western foreign policy establishment over IBC is through the funding provided to IBC’s directors, Sloboda and Dardagan et al., for parallel casualty recording work, much of which overlaps and makes use of IBC’s work. They, along with other IBC-affiliated researchers like Spagat and Hicks, have actively promoted the idea that the IBC database provides an accurate picture of the Iraq War death toll, despite the fact that it is widely recognized in the social science literature that it is impossible for passive surveillance techniques to ever provide a complete picture of conflict impacts and civilian deaths.

Sloboda and Dardagan have actively sought to build on their IBC credentials to promote their flawed methodology as a general approach to estimating casualties, through the Every Casualty programme first run at ORG, and later set up as its own organization. This work receives funding from the US government through USIP, the Swiss foreign ministry, the Norwegian foreign ministry, and the German foreign ministry.

It is a matter of record that all four of those governments were supportive of the Iraq War (obviously the US was the key instigator) — even the German government, which despite its public opposition and private concerns, helped pave the way for the Iraq War and then, as noted by Tobias Pfluger, then Member of the European Parliament sitting on the EU Parliamentary Committee on Foreign Affairs, provided active military and logistical support for the war, short of sending troops. And as noted already, USIP was directly linked to Bush administration efforts to consolidate the occupation, and sanitize the impact of US violence.

Brian Dean wants us to believe that it is possible to separate the actions and interests of the directors of an organization from the organization itself. Sadly, it isn’t. Throughout his ‘refutation’, he scoffs at the strange idea that one might raise questions about IBC as an organization based on the systematic behavior, research and publications of its own directors.

The fact, which Dean keeps dodging, is that since 2009, IBC director Sloboda et al. have been funded by the very same US, and later European, government agencies complicit in the crimes of the Iraq War.

Ahmed doesn’t mention the other members of Every Casualty’s peer network (apart from CERAC), so I’ll put this particular “connection” to IBC in perspective by listing all of them: … [Dean proceeds to list them all (ed.)]

Ahmed fails to produce any evidence that IBC’s output (which, of course, started in 2003) has “been performed under the financial and organizational influence” of “powerful vested interests” (eg as a result of IBC being one of the many members of the above peer network).

IBC’s membership of the International Practitioner’s Network or Casualty Recorder’s Network is mentioned in my piece at the end of a discussion about the funding context. I do not suggest that this membership in itself proves that IBC’s output has been performed under the financial and organizational influence of powerful vested interests. Here is the context:

“Every Casualty now lists its core funders as USIP, the Swiss Federal Department of Foreign Affairs, the German Federal Foreign Office, and the Norwegian Ministry of Foreign Affairs.

“Despite Switzerland’s reputation for neutrality, all three European governments have supported US foreign policy in Iraq. Even Switzerland, in 2007, came under fire after revelations the government had sold arms destined for Iraq through Romania.

“The IBC, of which Sloboda and Dardagan remain directors, is listed as a member of Every Casualty’s ‘International Practitioner Network,’ along with the Conflict Resource Analysis Center (CERAC), an organization founded and set-up by Michael Spagat in Bogota, Colombia.”

The way Dean talks about IBC, anyone would think IBC had nothing to do with Sloboda and Dardagan. The fact is that Sloboda and Dardagan are at once directors of Every Casualty, funded as above by the very governments complicit in the Iraq War, and directors of IBC, a purportedly independent anti-war outfit, which is also among the members of Every Casualty’s network.

Dean is happy with that. I’m not.

Ahmed doesn’t provide any links when he mentions the funding by the “US government-backed agency” (ie USIP) of ORG’s “two-year initiative that ran from 2012 to 2014” — perhaps because of the obviously innocuous nature of this work and the fact that not a single penny of the USIP funding went to IBC.

Once again, Dean strikes out against a straw man of his own concoction. I did not, in fact, argue that USIP funding went to IBC. I argued that USIP funding went to IBC’s directors for parallel casualty recording research. Moreover, I point out that this parallel casualty recording research was undertaken at an organization which itself had a longstanding partnership with IBC since inception.

But, as it happens, Dean’s unnecessary denial appears to be a Freudian slip, as we will see below.

As for IBC being a “partner” of “ORG’s USIP-funded ‘Recording the Casualties of Armed Conflict’”, it’s worth citing the entire section which Ahmed refers to (from ORG’s Annual Report, year-ending December 2010). Ahmed doesn’t provide a link to this, but there is a scan available of the document. The paragraph on IBC is actually about IBC’s work with Wikileaks.

USIP began funding ORG’s Every Casualty programme under Sloboda and Dardagan’s watch in 2010. During this period, IBC was described in ORG’s Annual Report as “one of our partners.”

Dean once again seeks to mischaracterize this issue, by pretending that IBC’s partnership with ORG was exclusively related to IBC’s “work with Wikileaks.”

But we know that funding received by Sloboda and Dardagan under the various ORG casualty recording programmes has supported IBC’s work since early on. IBC’s 2005 dossier of Iraqi civilian casualties, by Sloboda and Dardagan et al., was published by ORG.

This lucrative partnership continued until fairly recently, with ORG providing further funding to IBC for research. In Dardagan’s 2013 report on Syria casualties for ORG’s USIP-funded Every Casualty programme, for instance, he confirms:

“In order to extract additional value from their hard-won information, ORG commissioned Conflict Casualties Monitor, the UK company that runs the Iraq Body Count (IBC) project, to undertake a new analysis focusing on victim demographics, along the lines of earlier models of such work by IBC (2005), including in collaboration with others as published in the New England Journal of Medicine (2009), PLOS Medicine (2011) and The Lancet (2011). For the purposes of this study, the Syrian databases were combined into a single data set suitable for quantitative analysis.”

This makes for an interesting set-up, as far as conflicts of interest go. Dardagan and Sloboda in 2013 were running ORG’s Every Casualty programme. In that capacity, they received funding from USIP and the German foreign affairs ministry, among others.

So that year, Dardagan and Sloboda, via ORG, basically commissioned themselves, through IBC, to do research at ORG on the inherently limited sample of civilian deaths collected by various Syrian monitoring groups in ORG’s practitioner network, and to then make various flawed statistical generalizations about those deaths using a methodology criticized as misleading by the world’s leading experts on conflict statistics at HRDAG.

Meanwhile, we are to keep believing, as per Dean’s repeated intonations, that not a single penny of funding from the US and European government funders of ORG ever went to IBC.

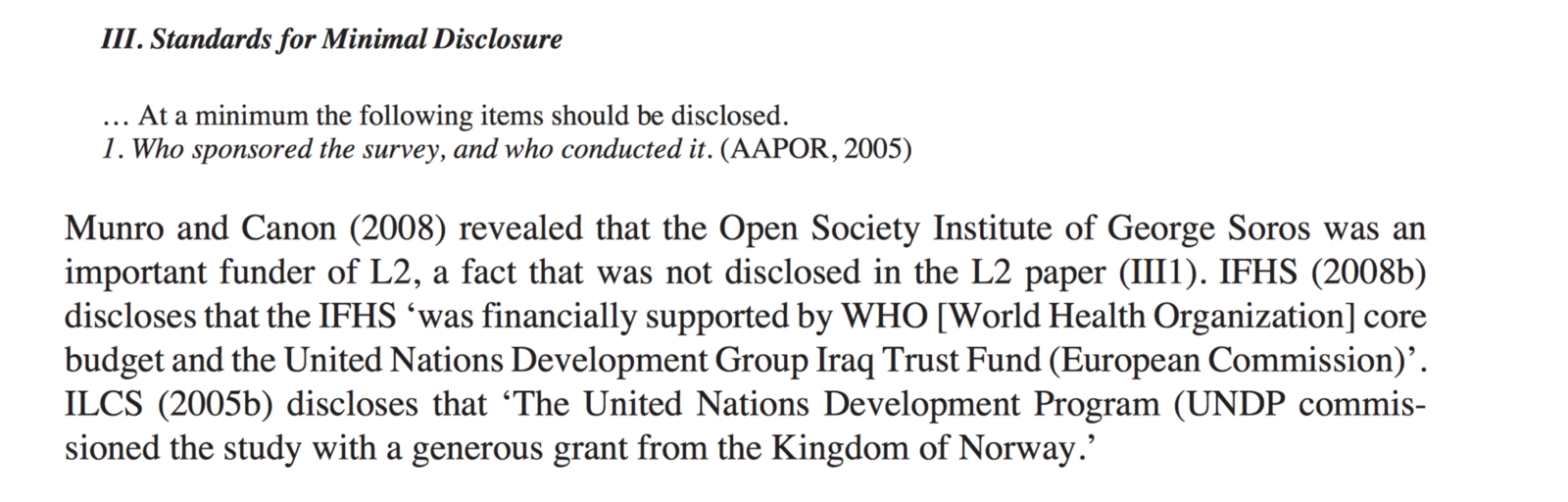

Whether or not that is the case (and I did not actually make that case in my Insurge piece at all), it is also worth noting here that Dardagan’s report specifically acknowledges that several scientific publications, which I have raised questions about in terms of conflicts of interest, were facilitated by the ORG-IBC partnership. Yet, in a severe ethical breach, in none of those publications do the authors acknowledge any of the research funding received by this partnership from US and European foreign policy agencies.

In his eagerness to avoid the awkward questions this raises, Dean then proceeds with the following irrelevant rambling:

Recall that Wikileaks’ Julian Assange and IBC’s John Sloboda spoke together at the press conference covering the Iraq War Logs. Assange commented: “Working with Iraq Body Count, we have seen there are approximately 15,000 never previously documented cases of civilians who have been killed…”. What can we infer from this? Perhaps Julian Assange, in this instance, “performed under the financial and organizational influence” of USIP? There’s certainly a “link”, so it’s possible. And if we follow the conspiriological mode of inference, then it’s surely our moral obligation to conclude that this remote possibility is a definite certainty which should be “exposed”. All in the name of “investigative journalism”.

Julian Assange does not receive funding from USIP. Sloboda does. USIP has a demonstrated pro-Iraq War bias.

Dean, and it seems Monbiot, would rather we not reflect on this.

Conspiriologically transferable “conflicts of interest”

As we’ve seen from his (IBC-accusing) inference about 1990s grants to Spagat from NCEEER and IREX, etc, and from his (IBC-accusing) inferences about USIP funding for ORG/’Every Casualty’, Ahmed’s reasoning is based on a premise that the perceived taint, or “compromise”, from a given piece of funding is transferable to research with completely different funding — even to research from different decades, on different countries and topics, or by different people. As long as some association or “connection” can be asserted, then a perceived “conflict of interest” can be (and should be) transferred.

No, this is another construct of Dean’s quite vivid imagination. I don’t mention Spagat’s early career NCEEER and IREX funding in isolation, but in relationship with his rather seamless receipt of funding from US government agencies or from institutions linked closely to the US government, including Radiance Technologies (a US defence contractor which ran the US Army’s counterinsurgency programme in Latin America) for Colombia research; USAID for Colombia research (USAID being another big, complicated institution which has, however, had a bad and very politicized track record in Colombia, as I document in the piece) etc. etc.

At one point in his missive, Dean balks at my portrayal of USAID as a “nefarious” institution. This is another absurd oversimplification, as if raising legitimate criticisms of an institution means that we are calling that institution wholly nefarious. The “framing” once again is more revealing of Dean’s mindset toward criticism of power as “conspiracy theorizing.”

Interestingly, Dean chooses to ignore the fact that Spagat’s (US defence contractor and government-funded) statistical fraud to understate civilian deaths in the Colombia conflict was exposed and criticized by Human Rights Watch.

Unfortunately for Brian Dean, this dubious track record does, indeed, raise legitimate questions about the integrity of Spagat’s research, especially his work analyzing conflict databases.

Dean follows with a very long and rambling missive on the question of research funding. He attempts to obscure the point of what I said in response to Gelman.

It’s curious, then, that in Ahmed’s follow-up piece, in which he replies to his critics (including statistics professor Andrew Gelman), he writes the following:

In this case, the fact that Gelman at some time in his career received some NSA funding for some specific research is neither here nor there — it reveals little if nothing about the general validity of his research on statistics, and certainly the same applies to his views on the Iraq question.

Firstly, one might ask how Ahmed can know for certain — without a thorough “investigation” — that Gelman’s NSA funding wasn’t as relevant to the “general validity” of his work as Spagat’s NCEEER funding (for example) was to the “reliability and impartiality” of IBC’s work? In a moment of temporary reasonableness followed by spectacular falsehood, Ahmed then writes:

The point here is that Gelman would be under obligation to have disclosed his funding to publications with respect to the specific research being funded and published, in order for readers and evaluators of the researchers to be aware of the relevant context.

This is precisely what didn’t happen in relation to all of the peer-reviewed publications put out by the IBC team and those associated with the IBC, Spagat included. In not a single one of those publications did they disclose that a significant portion of funding for their conflict research, including specifically on Iraq, came from US and European government agencies which happen to be closely linked with foreign policy.

…. This, of course, would be a huge and serious falsehood. To clarify, I asked Ahmed to “please list the IBC publications which you think were funded by these agencies, so that I can check directly with IBC to see if your assertion is true”. His response was defensive and failed to answer my question:

This may be challenging for you to understand, but by “their conflict research” I did not mean the singular publication in question, but “their conflict research.”

His phrase, “their conflict research” (eg on Iraq), was as “specific” as he would get. It could, of course, refer to the ORG or ‘Every Casualty’ research, whose funding was openly disclosed by the publications of those groups.

Here, Brian Dean demonstrates how deeply confused he is about my actual argument, and about the ethical issues surrounding research funding.

Dean points out that ORG’s and Every Casualty’s funding was “openly disclosed by the publications of those groups.” I don’t dispute that. That’s not what my article addresses. I’m not talking about the publications “of those groups.” I’m talking about the publications by IBC authors in scientific journals.

I stated, as Dean acknowledges:

“This is precisely what didn’t happen in relation to all of the peer-reviewed publications put out by the IBC team and those associated with the IBC, Spagat included.”

Dean appears to confuse disclosure in “peer-reviewed publications” with organizational disclosure of institutional funding. Sure, ORG and Every Casualty have disclosed their funding from US and European governments. That’s obvious. How else would I be able to point to the funding?

The problem is that “the IBC team,” in their papers published in academic journals, do not disclose this funding in those publications.

Ahmed continued:

Do you dispute that throughout the period in which these IBC-linked publications emerged, IBC researchers who authored them have received funding for their conflict research from USIP and other government agencies?

This should indeed be disputed, as it’s misleading — aside from the fact that it’s irrelevant to whether funding for “the specific research being funded and published” (Ahmed’s wording) was disclosed (it was).

As I’ve kept repeating ad nauseam, no one is disputing whether the funding, generally speaking, was ever in some form “disclosed.”

The issue is whether the funding received by IBC authors was disclosed to and within the scientific journals in which their research was published.

What Dean is trying to argue here, what Monbiot is endorsing here, is that it doesn’t matter if Sloboda and Dardagan failed to declare funding they received from the pro-Iraq War foreign affairs agencies of the US and some European governments, when they were publishing these papers in journals which drew on their IBC work.

It does matter.

The main period in which “IBC researchers” (eg Sloboda, Dardagan, Hicks) received such funding (ie for Every Casualty, 2012–2014) doesn’t coincide with their published “IBC-linked” journal output (2009–2011, mostly in 2009). Of course, Ahmed’s wording is so vague (“their conflict research”) that he might argue there’s a bit of overlap somewhere (eg with some ORG funding in 2010). But his statement, “throughout the period in which these IBC-linked publications emerged”, is misleading. Note, also, the fairly obvious fact that IBC’s ongoing work (not their journal publications) began in 2003, a decade or so before this non-IBC funding is supposed to have “influenced” IBC’s output.

Dean does not shy from blatant falsehoods in his bizarre efforts to exonerate the IBC’s directors and associates.

From October 2009, IBC’s founding director John Sloboda was in direct receipt of funding from USIP to run the casualty recording programme at ORG. USIP gave him $100,161 for the following:

“A grant to define and test a generalizable framework for enumerating the casualties of armed conflict. Drawing on a newly-created international network of casualty recording practitioners, the initiative will produce a report that evaluates findings, raise the quality of data on the casualties of armed conflict, and professionalize the conduct of casualty recording. (USIP-009–09F) $100,161.”

ORG’s Annual Reports from 2010 onwards confirm that further core funding was received for this work in the ensuing years. By way of reminder, from 2010–2012, IBC adviser and researcher Michael Spagat received a grant from the same ORG programme, funded by USIP and other pro-Iraq War government agencies. There was no disclosure of this funding in the academic and scientific publications put out by Sloboda, Spagat, et al. in this period.

Here is a list of academic publications by IBC authors, most of which were already cited in my original piece, where there is no disclosure of the fact that the authors are in receipt, contemporaneous with the time of the article publication, of funding from US and some European government foreign affairs agencies that happen to have been complicit in the Iraq War:

http://www.tandfonline.com/doi/abs/10.1080/10242690802496898 (2010) (Spagat)

http://onlinelibrary.wiley.com/doi/10.1111/j.1740-9713.2010.00437.x/abstract (2010) (Spagat)

https://ojs.ub.uni-konstanz.de/srm/article/view/2373 (2010) (Spagat)

http://journals.plos.org/plosmedicine/article?id=10.1371/journal.pmed.1000415 (2011) (Hicks, Spagat, Sloboda, Dardagan)

http://www.thelancet.com/journals/lancet/article/PIIS0140-6736(11)61023-4/abstract (2011) (Hicks, Dardagan, Spagat, Sloboda)

http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0023976 (2011) (Hicks, Spagat)

http://www.thelancet.com/journals/lancet/article/PIIS0140-6736(12)60062-2/references (2012) (Hicks, Dardagan, Spagat, Sloboda)

John Sloboda, Hamit Dardagan, Michael Spagat, and Madelyn Hsiao-Rei Hicks, “Iraq Body Count: A Case Study in the Uses of Incident-based Conflict Casualty Data” in Taylor Seybolt et al., Counting Civilian Casualties (2013)

Ahmed repeatedly slams “IBC researchers” with accusations of “undisclosed research funding”, “conflicts of interest” and “breach of ethics” regarding their journal publications. But his accusations in this regard are typically couched in vague and non-specific rhetoric. The journal-published material by “IBC researchers” is easy enough to list and access, as is the openly-disclosed funding for ORG and Every Casualty, etc. If the journal publications (mostly from 2009) were guilty of “egregious ethical breaches”, as Ahmed asserts, then it would be easy enough to check and confirm (or, rather, refute) — given specific claims which he doesn’t provide.

In my earlier response to Gelman and HRDAG, I wrote:

“In all of their research outputs, neither Spagat nor his colleagues at IBC ever chose to declare to the journals in which they published their funding sources and funding affiliations to USIP, US military, and European foreign policy agencies related directly to the subjects on which they happen to be publishing.”

Given that the Johns Hopkins Bloomberg School of Public Health has a “close institutional relationship” with USIP (arguably “closer” than “IBC executives” have — as mentioned above), including collaborations on conflict research (Iraq, Afghanistan, etc), perhaps all Johns Hopkins “linked” publications should declare a conflict of interest?

I have no doubt whatsoever that legitimate questions can be asked about the funding and institutional relationships of a whole range of university departments. I, and many of my academic colleagues, routinely express such concerns in conversations about university politics.

In this case, though, the analogy is specious. Dean points to an entire university department, the Johns Hopkins Bloomberg School of Public Health, and says that it has a “close institutional relationship” with USIP.

So USIP has funded several projects at Johns Hopkins. Questions about this research may or may not be legitimate, but Dean cites no compelling basis to suggest that the projects are flawed, and that these flaws correlate with financial patronage by USIP due to USIP’s ideological biases.

But USIP and three European governments have funded Sloboda et al. USIP and all three of those governments are demonstrably pro-Iraq War. USIP itself has a reasonably decent budget and funds all sorts of things. Some of these are indeed valuable, but all of them generally fit the parameters of what a paper in the International Journal of Peace Studies describes as an effort by the US government to subtly co-opt the peace movement.

But I guess Brian Dean’s position is clear.

He does not believe that there is any conflict of interest when the directors of an organization that claims to be opposed to the Iraq War are themselves funded by the foreign affairs agencies that were complicit in the Iraq War.

Clearly, Monbiot agrees.

Good for them. Not so good for dead Iraqis, though.

Dirty War Index (DWI)

Ahmed makes a series of misleading claims in a section which even has a deeply misleading (not to say hysterical) title: “IBC’s new metric to whitewash war-crimes”.

I did mention that Dean is obsessed with the word “misleading” and uses it among other rhetoric to “frame” his ‘refutation.’

The “Dirty War Index” (DWI) which he discusses in this section is, in fact, not “IBC’s” in any sense. It’s a completely separate piece of work, by Madelyn Hsiao-Rei Hicks and Michael Spagat (published in PLOS Medicine in December 2008). I can’t see how anybody who reads the PLOS paper would seriously entertain the notion that it’s an extension of IBC’s casualty recording work — although, obviously, data from conflicts (whether from IBC’s database or from other sources, including epidemiological surveys) can be used as input to the DWI tool.

Dean “can’t see” because he is not looking — or, specifically, probably has not even read the paper (or having read it, doesn’t understand it). He fails to explain how the DWI is in any meaningful sense “a completely separate piece of work.” It isn’t. First off, the DWI was created by two IBC scholars, one (Hicks) a director of IBC, the other (Spagat) an adviser to IBC. Spagat was also a consultant to the ORG programme, which commissioned IBC research.

Secondly, the DWI was refined and applied to the IBC database together with other IBC directors, Sloboda and Dardagan. As usual, Dean cites selectively. He ignores, for instance, this 2011 paper applying the DWI to Iraq, which is mentioned on the IBC website. The paper purported to identify clear trends in the nature of violence in Iraq, and ultimately downplayed the significance of US violence in war crimes. ORG, in 2013, confirmed that this was essentially “work by IBC” performed “in collaboration” with these academics to generate journal publications (in itself a strange way of characterizing IBC’s collaboration with its own directors and consultants).

Dean’s next strategy really is priceless. He refers to the journal articles that I quote, which raise questions about the reliability of the DWI due to the unreliable nature of sources available on armed conflict.

He quotes from some of the same academics who, while criticizing the scope of the DWI, concede that it may well be useful in assessing, measuring and/or communicating certain aspects of a conflict.

The problem is that this doesn’t address my main criticisms of DWI, which Dean pretends don’t exist. Those criticisms are fairly lengthy, and are based on the authoritative work of HRDAG, which conducts an extensive critique of the DWI as a whole. That critique is so damning that it calls into question even the potential usefulness of the DWI tool suggested by the academics Dean cites, whose criticisms he quotes without understanding their implications.

In a bit, I’ll reproduce some of that critique, which Dean hopes you won’t read, once again for ease of reference.

Of course, it doesn’t require Ahmed’s “investigative journalism” to point out the obvious fact that the usefulness of DWI depends on the quality and reliability of data used with it. Ahmed cites a study which claims that DWI relies on assumptions about the data utilised which are “seldom, if ever, met in a conflict situation”.

Then Dean goes on with his quotes.

Professor Nathan Taback (also cited by Ahmed) comments on a use for DWI originally proposed by Hicks and Spagat — namely, drawing attention to war-related rapes:

“Selection bias impedes the generalizability of a DWI, but the DWI could nevertheless be sufficient for policy or legal purposes. For instance, if a biased sample of rape victims has a rape DWI of 10%, then this information might, for example, be useful in planning a prevention program or gathering evidence for a criminal investigation, even if the magnitude of the bias is not readily quantifiable.”

Note the irrelevance of Taback’s point here to my actual critique. Taback admits that a biased sample of rape victims might give a high percentage DWI (he gives the example of 10%), which, he says, despite being inaccurate in terms of reflecting the actual scale of rape, might help to highlight the problem. Great.

That’s not what Sloboda et al. are trying to do with the DWI, though. They are trying to draw sweeping generalized conclusions about trends in violence using the DWI, while pretending that it has no bias at all, even though, as Taback says, “the magnitude of the bias is not readily quantifiable.”

In a separate PLOS Medicine piece, Egbert Sondorp concludes as follows:

“[A] whole range of DWIs can be constructed, from rape to the use of prohibited weapons to the use of child soldiers, as long as acts counter to humanitarian law can be counted. The authors hope that the ease of use and understanding of DWIs will facilitate communication on the effects of war, with the ultimate goal being to moderate these effects, a similar aim to that of humanitarian law.”

Dean ignores Sondorp’s caveat — that DWIs can be constructed “as long as acts counter to humanitarian law can be counted.”

That is precisely the point of contention. Does an IBC-style database based on passive surveillance (explained below) allow all “acts counter to humanitarian law” to be counted? The answer is no. And since it does not, DWIs built on such a database cannot be meaningfully used to understand these phenomena in conflicts.

Dean ignores the entire large chunk in my piece where I refer to HRDAG’s damning critique of the DWI:

“If only 25% of civilian deaths and 75% of combatant deaths are recorded, for instance, then the DWI measure would under-measure the proportion of ‘dirty’ incidents by half the true proportion. Other types of under-recording could lead to worse levels of inaccuracy… The HRDAG team are particularly scathing on IBC’s favoured data sources.”
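To make the arithmetic behind that example concrete, here is a minimal sketch. It assumes the DWI is computed as the share of “dirty” cases among all recorded cases, expressed as a percentage, and it assumes equal true numbers of civilian and combatant deaths. The figure of 1,000 each is purely illustrative and is not taken from HRDAG or from any dataset. The function names are hypothetical.

```python
def dwi(dirty_cases: float, total_cases: float) -> float:
    """Dirty War Index as a percentage: dirty cases / total cases * 100."""
    if total_cases <= 0:
        raise ValueError("total_cases must be positive")
    return 100.0 * dirty_cases / total_cases


def recorded_dwi(true_civilian: float, true_combatant: float,
                 civilian_recording_rate: float,
                 combatant_recording_rate: float) -> float:
    """DWI computed only from the deaths that actually get recorded."""
    recorded_civilian = true_civilian * civilian_recording_rate
    recorded_combatant = true_combatant * combatant_recording_rate
    return dwi(recorded_civilian, recorded_civilian + recorded_combatant)


# Illustrative assumption: equal true numbers of civilian and combatant deaths.
true_civilian = 1000
true_combatant = 1000

true_value = dwi(true_civilian, true_civilian + true_combatant)
observed = recorded_dwi(true_civilian, true_combatant, 0.25, 0.75)

print(f"True civilian-death DWI:     {true_value:.1f}%")
print(f"DWI from recorded data only: {observed:.1f}%")
```

Under these assumptions the true figure is 50% while the recorded data yield 25%, which is the halving described in the quote. With a different true mix of civilian and combatant deaths the size of the distortion changes, but the measure is still biased whenever the two recording rates differ.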

Here are the quotes I use from HRDAG:

“Passive surveillance systems, such as media reports, hospital records, and coroners’ reports, when assessed in isolation, lack even the benefit of a defensible confidence interval. Like most data about violence, these are convenience samples — non-random subsets of the true universe of violations. Any convenience dataset will include only a fraction of the total cases of violence… fractional samples can never be assumed to be random or representative.”

In a separate analysis, Patrick Ball and Megan Price of HRDAG point out:

“… we are skeptical about the use of IBC for quantitative analysis because it is clear that, as with all unadjusted, observational data, there are deep biases in the raw counts. What gets seen can be counted, but what isn’t seen, isn’t counted; and worse, what is infrequently reported is systematically different from what is always visible… As is shown by every assessment we’re aware of, including IBC’s, there is bias in IBC’s reporting such that events with fewer victims are less frequently reported than events with more victims.”

Ball and Price go on:

“We suspect there are other dimensions of bias as well, such as reports in Baghdad vs reports outside of Baghdad… The victim demographics are different, the weapons are different, the perpetrators are different, and the distribution of event size varies over time. Therefore, analyzing victim demographics (for example), weapon type, or number of victims over time or space — without controlling for bias by some data-adjustment process — is likely to create inaccurate, misleading results.”

From this, I conclude:

“… This means that extrapolating general trends in types of violence from an IBC-style convenience dataset, which is inherently selective, is a meaningless and misleading exercise. It cannot give an understanding of the true scale and dynamics of violence. Yet this is precisely what IBC-affiliated researchers have been doing.”

Having made sure to omit that critique of DWI, Dean says:

But here’s how Nafeez Ahmed characterises DWI:

It is not surprising that IBC’s DWI is seen as such a useful tool by NATO. The inherent inaccuracy built into the DWI means that it systematically conceals and obscures violence in direct proportion to the intensification of violence. In the hands of the Pentagon, DWI provides a useful pseudo-scientific tool to mask violence against civilians.

This is misleading in nearly every respect. As pointed out above, DWI is not “IBC’s” in any sense. There’s no “inherent inaccuracy built into the DWI” — any inaccuracy present derives from the data used with it. It’s obviously false — and absurd — to assert that DWI (which is a simple ratio, in itself neutral) “systematically conceals and obscures violence in direct proportion to the intensification of violence”.

Compare this to what HRDAG actually say about my analysis of IBC’s methodology, and of the DWI tool created by IBC researchers, and applied to the IBC database to produce flawed analyses of violence in Iraq:

“We welcome Dr Ahmed’s summary of various points of scientific debate about mortality due to violence, specifically in Iraq and Colombia. We think these are very important questions for the analysis of data about violent conflict, and indeed, about data analysis more generally. We appreciate his exploration of the technical nuances of this difficult field.”

So HRDAG generally agrees with my critique of the DWI tool, which draws extensively on their work. Dean, of course, doesn’t.

He’s at liberty not to, of course.

But given that the DWI was adapted by NATO to sanitize war crimes, even according to the analysis by IBC’s own former consultant Prof. Marc W. Herold, I’m not sure why Dean’s ignorant objections and obfuscations matter.

Nafeez Ahmed, for example, writes that the journal (Defence and Peace Economics) which published Spagat’s 2008 paper is “ideologically slanted toward promoting and defending US global hegemony”, and that it is a journal “whose editors and peer-review network would lean ideologically toward publishing a fraudulent paper” (specifically one which was critical of the 2006 Lancet Iraq study).

Based on my full analysis of Spagat’s Defence and Peace Economics paper (which was actually published in 2010, although it was accepted for publication in 2008), as well as of the editorial and review context in which it was published, I stand by this. The ideological leaning of that journal is hardly a secret. It is openly discussed within the journal itself, as I documented in my main article.

You wouldn’t think the rhetoric and double-standards regarding peer-reviewed journals could be ratcheted up more than that, but in the next paragraph, Ahmed writes that there should be “a formal investigation” into IBC’s “capacity to garner legitimacy by publishing in scientific journals”. Perhaps such an investigation would show that the editors of journals which publish material by “IBC-affiliated researchers” (including The Lancet, PLOS Medicine, New England Journal of Medicine, Nature, etc) have “ideological leanings” which Ahmed doesn’t approve of.

Actually, as Dean again ignores, I wrote this in my INSURGE piece:

“That they have been able to publish these deeply misleading analyses in scientific public health journals is a disturbing indication of how little the academics sitting on the review boards of these journals actually understand the on-the-ground dynamics of conflict.”

But Dean would rather put words in my mouth.

Tirman has said that a $46,000 OSI grant was received on 4 May 2006, and that the Lancet survey itself was started in “late spring [2006]”.

Dean’s bullshit knows no limits, it seems. This is comparing apples and oranges. Dean ignores the fact that Tirman, the MIT scientist who commissioned the Lancet survey, clarified this, as I quoted in the piece Dean is trying to refute:

“Open Society Institute funded a public education effort to promote discussion of the mortality issue. The grant was approved more than six months after I commissioned the survey, and the researchers never knew the sources of funds. As a result, OSI, much less George Soros himself, had absolutely no influence over the conduct or outcome of the survey.”

I’ve clearly documented, from multiple scholarly sources, that USIP does suffer from ideological biases in support of US foreign policy goals, and that more specifically USIP suffers from deep-seated and quite unambiguous biases with respect to the Iraq War, and the sources of violence in the war. Dean shows nothing of the sort in relation to OSI, whose day-to-day grant-making decisions are not made personally by George Soros.

Yes, the OSI funding was granted before the Lancet survey was started, but it was also granted more than six months after Tirman actually commissioned the survey. So the OSI funding posed no conflict of interest. It was purely related to funding public discussions about “the mortality issue,” namely to “pay for some travel for lectures, a web site, and so on.”